LLaMB’s Dynamic Prompts: unlocking magic without the dark arts

29 Feb

This is part one of a series of posts explaining the breakthrough features of the LLaMB framework, which addresses the challenges of building Generative AI solutions in the enterprise.

Prompt engineering in the enterprise – today

A topic that always comes up with customers using the Microsoft, OpenAI, or other LLM APIs, as well as emerging developer toolkits, is the complexity and nuance of prompts. Prompt engineering means crafting and refining written instructions that guide an AI model to consistently generate the most desirable outputs; prompt engineers use these text instructions to tell the model which tasks to perform. However, writing a prompt that consistently produces a precise, personalized, well-formatted result is almost a dark art. It requires strong linguistic skills, a solid grasp of language intricacies, and the judgment to apply those nuances in different contexts, and the results are often specific to each LLM and even to each LLM version. In the enterprise, it also means understanding and interpreting data formats and making sure domain-specific context is reflected in the prompts.

The questions we hear about prompts from our customers:

Why do I have to build different prompts for each LLM, and sometimes even for each version of an LLM?

What measures do you have to prevent prompt hacking?

How do I create prompts within my corporate data policy rules?

How do I manage context length effectively when I create prompts with enterprise data?

How do I eliminate hallucinations via prompts?

How do I ground a prompt in enterprise data?

How do I ensure I always get the source links?

How do I make sure my enterprise brand is protected?

“Leonardo DaVinci hard at work as a prompt engineer” –Midjourney

The question: Can we eliminate this prompt hurdle?

Designing prompts that produce consistent outputs while handling tens of thousands of requests a day with precision and accuracy is a non-trivial task. Prompt engineering is exceptionally challenging; it is the most significant obstacle to delivering a usable enterprise-wide generative AI agent. At first glance it may seem straightforward: simply coax the Large Language Model (LLM) into responding exactly as desired. In practice, it is unexpectedly difficult. Tuning a prompt involves so many interacting aspects that it is hard to grasp the full extent of the intricacies unless you are an experienced engineer actively working in this field.

The answer: Dynamic Prompting

Our engineers’ breakthrough innovation was the creation of Dynamic Prompting, a mechanism designed to eliminate the quagmire of prompt engineering.

What is Dynamic Prompting?

Avaamo’s Dynamic Prompting grew out of the need to simplify developers’ work and provide an abstraction layer for building LLM agents. LLaMB relieves enterprise developers of the burden of creating, managing, and updating prompts and of building custom prompt libraries for each use case; with Avaamo LLaMB, they no longer need to worry about the nuances of individual LLMs or LLM versions. Dynamic Prompting is a novel approach to interaction design: it generates prompts in real time based on user behavior, context, enterprise in/out data, and enterprise identity and access control rules, adjusting and customizing each prompt to deliver an immersive, streamlined, and personalized user experience.
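To make the idea of a prompt generated at request time more concrete, here is a minimal Python sketch that assembles a prompt from per-request context rather than from a fixed, hand-tuned template. It is not Avaamo’s implementation: `RequestContext`, `build_prompt`, their fields, and the choice to keep only the last three conversation turns are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RequestContext:
    """Hypothetical per-request context; the field names are illustrative only."""
    persona: str
    user_role: str
    language: str
    response_format: str
    history: list[str] = field(default_factory=list)


def build_prompt(ctx: RequestContext, query: str, passages: list[str]) -> str:
    """Assemble a prompt from the current context instead of a static template."""
    sections = [
        f"You are {ctx.persona}.",
        f"The user has the role '{ctx.user_role}'. Answer in {ctx.language}.",
        f"Format the answer as {ctx.response_format}.",
    ]
    if ctx.history:
        # Assumption: only the three most recent turns are kept to bound prompt length.
        recent = "\n".join(f"- {turn}" for turn in ctx.history[-3:])
        sections.append(f"Earlier in this conversation the user asked:\n{recent}")
    # Number the grounding passages so the model can cite them by index.
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    sections.append(f"Answer only from these sources:\n{sources}")
    sections.append(f"Question: {query}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Made-up example values, not real policy content.
    ctx = RequestContext(
        persona="a corporate travel assistant",
        user_role="manager",
        language="English",
        response_format="a table",
        history=["What is my hotel allowance in New York?"],
    )
    print(build_prompt(ctx, "How does that compare with London?",
                       ["(International travel policy excerpt)"]))
```

Keeping a fixed number of recent turns is an arbitrary trade-off between context and prompt length; a production system might instead rewrite the query from the full conversation.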

The anatomy of a dynamic grounded prompt

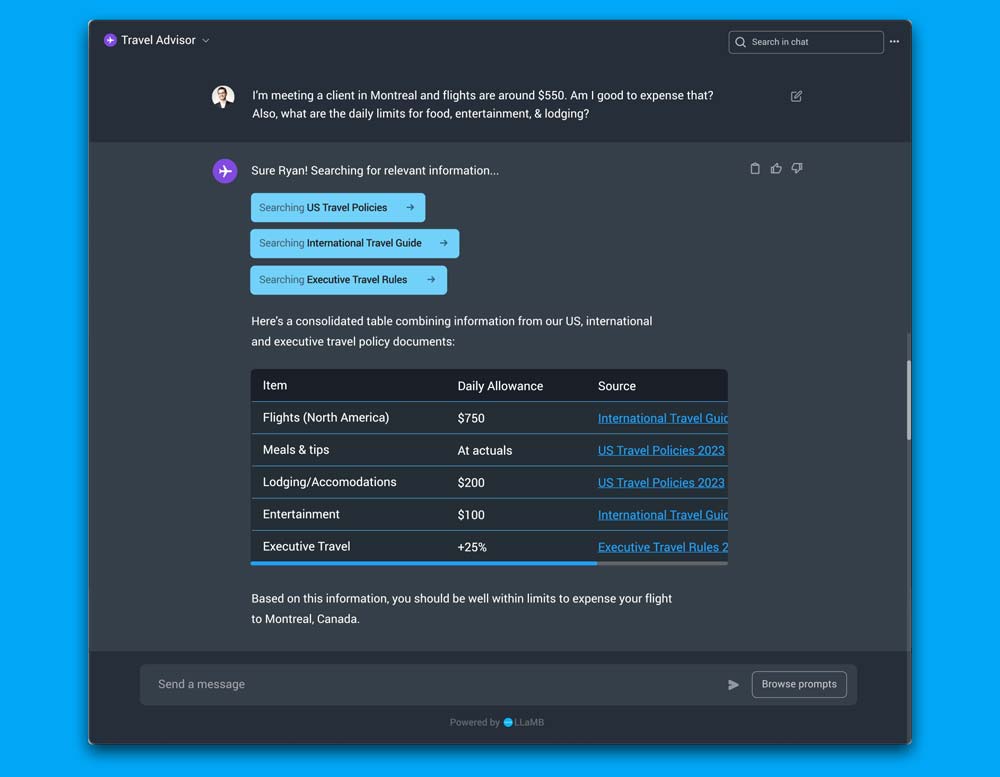

Let’s take a real-world example to see dynmaic prompts in action. The following screenshot is of an AI Travel Advisor built in the LLaMB framework:

Travel Advisor Agent – Built with LLaMB

In this example, let’s assume that all the relevant enterprise data, travel policy documents, and metadata have already been loaded into the system (the topic of the next post), and focus on how the dynamic prompt is constructed.

This might look like a good case for prompt chaining, but chaining does not work for most enterprise virtual agent use cases because of the time it takes to execute such complex chains of prompts and the associated costs. Instead, Avaamo’s patent-pending LLaMB engine creates a single dynamic prompt that generates this response: a consolidated table combining information from the US, International, and Executive travel policies, all within a few seconds.
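The single-prompt alternative to chaining can be sketched as follows: excerpts from all three policies go into one request that asks for one consolidated table, instead of one LLM call per policy followed by a merge call (four calls for three policies). This is a hypothetical illustration; the function name, the excerpt dictionary, and the prompt wording are assumptions, not LLaMB’s.

```python
def build_comparison_prompt(question: str, excerpts: dict[str, list[str]]) -> str:
    """Build a single prompt that covers several policy documents at once.

    `excerpts` maps a (hypothetical) policy name to the passages retrieved from it.
    """
    blocks = []
    for policy, passages in excerpts.items():
        bullet_list = "\n".join(f"- {p}" for p in passages)
        blocks.append(f"### {policy}\n{bullet_list}")
    # One table column per policy, in the order the policies were supplied.
    columns = " | ".join(excerpts)
    instructions = (
        "Using only the policy excerpts below, answer the question as one table "
        f"with the columns: Topic | {columns}. "
        "Name the source policy for every cell you fill in."
    )
    return "\n\n".join([instructions, *blocks, f"Question: {question}"])


if __name__ == "__main__":
    # Placeholder excerpts; real passages would come from retrieval.
    prompt = build_comparison_prompt(
        "What are my hotel and airfare limits?",
        {
            "US": ["(US policy excerpt)"],
            "International": ["(International policy excerpt)"],
            "Executive": ["(Executive policy excerpt)"],
        },
    )
    print(prompt)
```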

All these facets of the interaction feed into the pre-processing, post-processing, and dynamic construction of this prompt. The table below lists some of the parameters that go into a dynamic prompt:

| Dynamic Prompt parameter | Why it matters |
|---|---|
| Persona | Which persona the agent should adopt (e.g., a travel assistant). |
| Prior questions | LLMs are stateless and treat each request as independent, which does not work for a conversational agent. To respond to any question, Avaamo’s dynamic prompting layer reconstructs the user query so that all of Ryan’s (the employee’s) past travel-related interactions are considered. |
| User identity | Enables access management and personalization. In this case: Ryan’s role, the organizations he belongs to, and the associated travel policies. |
| Language | Supporting 100+ languages means prompts must be aware of language-specific terms and allow for mixed-language queries and phrases. |
| Content access control | Ensures the right content reaches the right user. In this travel use case, only the US travel policies that Ryan has permission to access. |
| Content metadata | Ensures the latest and most appropriate content is used. In this case, the latest version of the travel policy. |
| Sentiment | Captures the sentiment of the whole conversation (not just the current query) and ensures the response takes it into account. |
| Tone | Determines how to handle certain tones and what output to generate in response. |
| Format of response | Ensures the response is in the best possible format (table, bullet points, summarized phrases) for the question, past interactions, and preferences. In this case, a consolidated dynamic table was the best choice. |
| PII masking | Identifies and masks PII while ensuring its context is not lost. No information about Ryan or the organization is sent to the LLM. |
| Abbreviations and business taxonomy | Company-specific terms, relationships, and references are taken into account when constructing the response. |
| Alternate responses | Picks the right response when alternate responses exist. In the Travel Advisor case, choosing among three different content sources. |
| Source links | Cites the right internal and external source(s). |
| Brand identity | Preserves brand and organization guidelines. |
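The content access control and content metadata rows can be illustrated with a small filter that runs before any text reaches the prompt. This sketch assumes each document carries a title, a version date, and a set of groups permitted to read it; the `Document` type and `eligible_documents` function are hypothetical, not part of any LLaMB API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Document:
    """Hypothetical document record with the metadata the filter needs."""
    doc_id: str
    title: str
    version_date: date
    allowed_groups: frozenset[str]


def eligible_documents(docs: list[Document], user_groups: set[str]) -> list[Document]:
    """Return the newest version of each document, keeping only those the user may read."""
    latest: dict[str, Document] = {}
    for d in docs:
        current = latest.get(d.title)
        if current is None or d.version_date > current.version_date:
            latest[d.title] = d
    # Access control is applied after version selection, so a superseded
    # version is never served in place of one the user cannot read.
    permitted = [d for d in latest.values() if d.allowed_groups & user_groups]
    return sorted(permitted, key=lambda d: d.title)
```

Selecting the newest version before checking permissions is a deliberate choice in this sketch: a user who cannot read the current version of a policy gets nothing for that title rather than an outdated copy.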

While this list isn’t exhaustive, it highlights several aspects of the dynamically grounded prompts that LLaMB generates on the fly from each user query to deliver consistent, personalized, and accurate responses, typically in three seconds or less and at minimal cost per generated response.
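The PII masking row describes keeping personal data away from the LLM without losing its context. One common way to do that, shown here as a simplified sketch rather than Avaamo’s method, is to replace each value with a typed placeholder such as `<PERSON_1>`, send only the masked text to the model, and restore the values in the response. The email regular expression and the explicit list of known names are stand-ins for the trained entity detectors a production system would more likely use; `mask_pii` and `unmask` are hypothetical names.

```python
import re

# Simple email pattern; real PII detection would cover many more entity types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def mask_pii(text: str, known_names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace PII with typed placeholders; return the masked text and a reverse map."""
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}

    def placeholder(kind: str, value: str) -> str:
        # Reuse the placeholder for a repeated value so references stay consistent.
        for token, original in mapping.items():
            if original == value:
                return token
        counters[kind] = counters.get(kind, 0) + 1
        token = f"<{kind}_{counters[kind]}>"
        mapping[token] = value
        return token

    # Mask emails first so names inside addresses are already hidden.
    text = EMAIL.sub(lambda m: placeholder("EMAIL", m.group(0)), text)
    for name in known_names:
        text = re.sub(rf"\b{re.escape(name)}\b",
                      lambda m: placeholder("PERSON", m.group(0)), text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in the LLM's response before showing it to the user."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

For example, masking `"Ryan (ryan@example.com) asked Ryan's manager."` with `["Ryan"]` yields `"<PERSON_1> (<EMAIL_1>) asked <PERSON_1>'s manager."`, and `unmask` restores the original sentence. The typed placeholders let the model still reason that a person and an email address are involved.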

Our customers are realizing that scaling up a Generative AI agent is fundamentally different from running a Proof-of-Concept on a local host. As this realization sets in, we’re thrilled to offer breakthrough solutions and features that simplify the deployment of Generative AI in enterprise settings, reduce its cost, and make it more efficient.