01 Dec Your language or mine?

Over the last four years, I have talked to hundreds of global enterprise customers who are in various phases of their Conversational AI journey. The conversation invariably veers towards multi-language support. Most of the customers we work with are Global 500 giants with a global footprint, so supporting multiple languages to give their users a personalized experience is critical.

However, what I realized is that speaking multiple languages is just the tip of the iceberg; truly providing a multi-lingual experience goes well beyond that. I have pieced together a set of questions/comments that I have heard from enterprises which form the core requirement set for multi-lingual support for our platform.

“We operate in 180 countries” – CIO, large global telco operator

In one of our first meetings with a large telco customer, the CIO told us, “We are a truly global company operating in 90% of the countries in the world, and we want this technology deployed globally.” It became clear to me that breadth of language and dialect support is an absolute must-have to drive higher adoption of virtual assistants.

We spent the time to build out Avaamo’s advanced dialog models for sentiment, intent and workflows that support 114 languages and dialects, whether it’s Albanian, Greek or Malagasy. Further, spoken and written languages vary by country, region and culture, and the ability to support German, Swiss German and French German with regional variation makes Avaamo’s dialog models unique in the industry.

“Can you support Hinglish, Spanglish, Singlish? What about Swiss German?” – Chief Customer Officer, life insurance organization

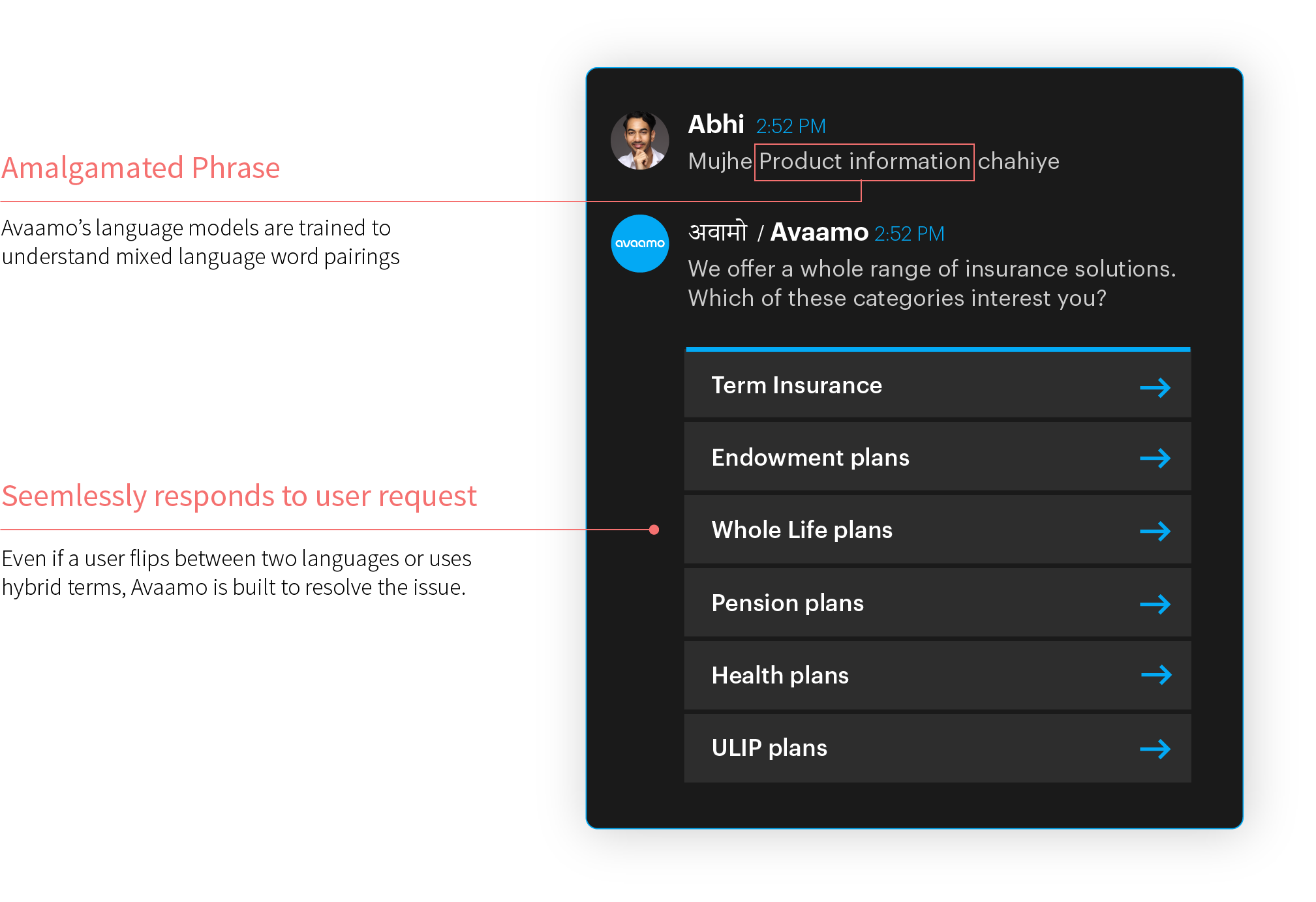

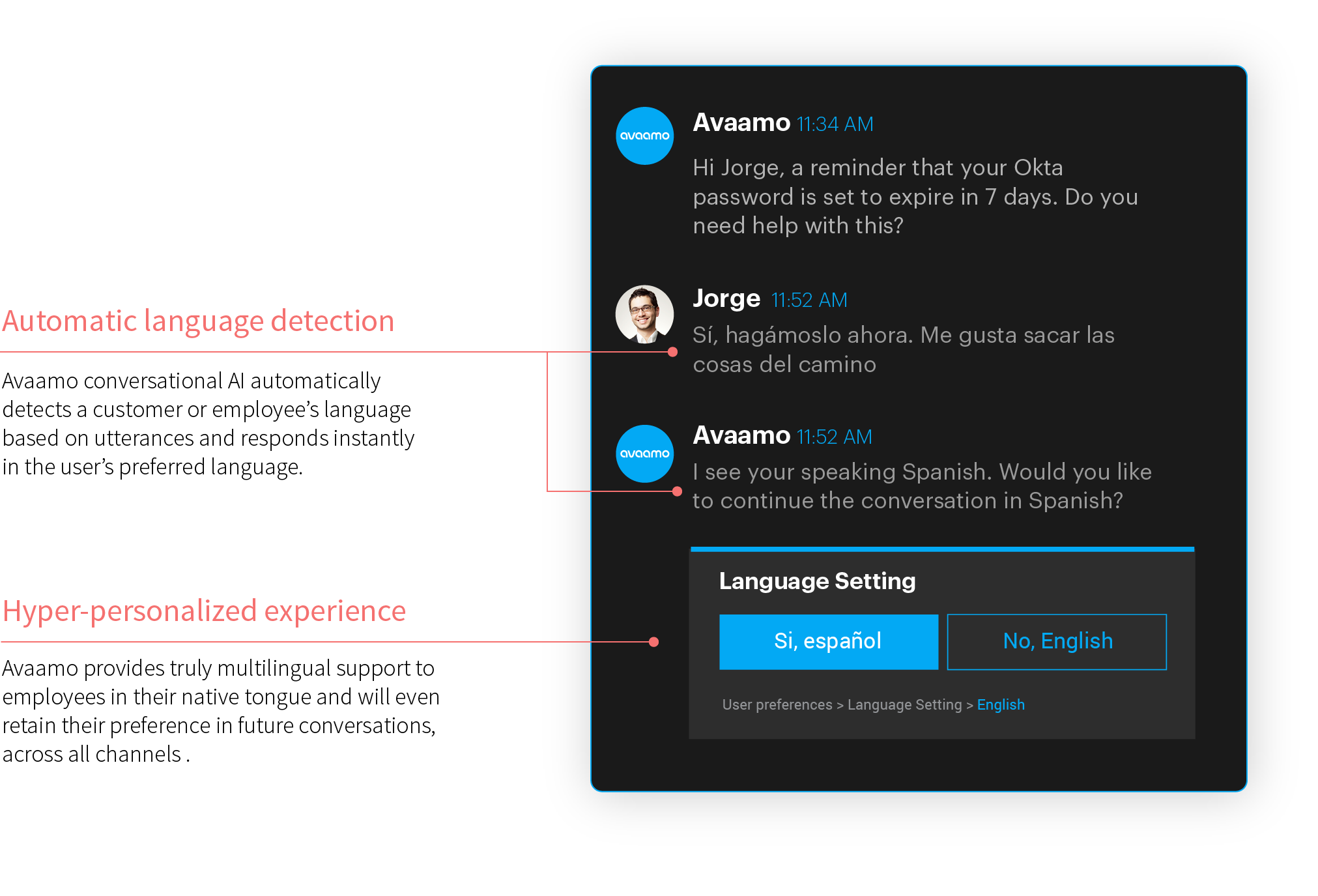

Customers expect to interact with the virtual agent the same way they interact with human agents. In Asian countries, as well as in some parts of Europe, it’s important to support mixed language, where a single sentence might combine phrases from two or three different languages.

Avaamo’s ML team worked with some of our early adopters, including a large Asian insurance company, to design language models that support mixed-language word pairings. These models achieve over 85% accuracy while responding to millions of queries each month.

“I want my English-speaking agent team to support customers in 40+ languages” – VP, Call Center Ops, BPS provider

It is also very common for enterprises to base their support team in one or two key regions and support their global users and customers from a centralized hub. One of the interesting requirements we heard from a European giant was to use Conversational AI as an interpreter. This reduces the pressure, in the current Covid environment, to hire multilingual agents, and it allows end users to communicate in their local language with English-speaking agents.

When the virtual agent has to hand over to live agents, we adopted a real-time, bi-directional translation technique that allows the agents to respond to queries from users in any language. We were also able to take some of these agent responses and feed them back into the training models, so the virtual agent learns from them and improves its accuracy in multiple languages.
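The handover flow described above can be sketched roughly as follows. This is a minimal illustration, not Avaamo's implementation: `HandoverRelay`, `toy_translate` and the phrase table are hypothetical names invented for the example, and a real deployment would plug in an actual translation service where the toy function sits.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical translator signature: (text, source_lang, target_lang) -> text.
Translator = Callable[[str, str, str], str]


@dataclass
class HandoverRelay:
    """Sits between a user and a live agent who do not share a language."""
    translate: Translator
    user_lang: str
    agent_lang: str = "en"
    # (agent text, text shown to the user) pairs kept for later training review.
    training_pairs: List[Tuple[str, str]] = field(default_factory=list)

    def to_agent(self, user_text: str) -> str:
        """Translate an incoming user message into the agent's language."""
        if self.user_lang == self.agent_lang:
            return user_text
        return self.translate(user_text, self.user_lang, self.agent_lang)

    def to_user(self, agent_text: str) -> str:
        """Translate the agent's reply for the user and record the pair."""
        if self.user_lang == self.agent_lang:
            reply = agent_text
        else:
            reply = self.translate(agent_text, self.agent_lang, self.user_lang)
        self.training_pairs.append((agent_text, reply))
        return reply


# Toy stand-in for a real translation service (assumption for illustration only).
_PHRASES = {
    ("es", "en", "¿Dónde está mi póliza?"): "Where is my policy?",
    ("en", "es", "It is in your inbox."): "Está en su bandeja de entrada.",
}


def toy_translate(text: str, source: str, target: str) -> str:
    # Unknown phrases pass through unchanged in this toy version.
    return _PHRASES.get((source, target, text), text)


relay = HandoverRelay(translate=toy_translate, user_lang="es")
```

Calling `relay.to_agent(...)` on each user message and `relay.to_user(...)` on each agent reply gives the two directions; the collected `training_pairs` stand in for the agent responses that are later reviewed and fed back into training.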

“Hey…wait a second…my developers only know English. How do I develop multilingual applications?” – Project Manager, healthcare provider

In most cases, the development team doesn’t have expertise in the multiple languages that the virtual agent is expected to support. It’s critical that the platform has tooling that allows teams to validate the content and conversation, along with a slew of built-in templates to support conversations in various languages.

For a large European insurance company, the virtual agent was expected to support 20+ languages, but the development team was primarily English speakers. Our tools helped them collaborate with content creators and product owners to ensure that the content was accurate in each language and that language-specific nuances were appropriately incorporated in the training.

“My documents are all in English, but my users are Chinese.” – IT Manager, pharma and life science org

For use cases that involve finding information in content, the platform needs to return precise results for user queries in different languages and also summarize the response in the language of the user.

A pharmaceutical organization had just rolled out a new expense management product globally, and all the support and documentation for the product was in English. For its Chinese-speaking employees, we were able to provide a virtual assistant that not only understood questions in Chinese but also found the appropriate section of the original English documents as the response and gave users a simple way to translate it in real time.

“Google Translate…meh” – Chief Customer Experience Officer, large US healthcare provider

Beyond basic small talk, Google Translate really doesn’t cut it, especially for enterprise use cases that are highly domain-specific. It’s important to have domain experts help define the language-specific dictionaries and to apply them in context rather than relying on a literal translation.

For a US healthcare provider that wanted to support various patient care cases in Spanish, we enabled the development of a custom dictionary to ensure the virtual assistant truly understood user queries and responded with a very high level of accuracy.
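To make the custom dictionary idea concrete, here is a small sketch of looking up expert-curated domain terms in a user query before any generic translation is attempted. It is only an illustration under stated assumptions: `find_domain_terms` and the sample glossary entries are hypothetical and do not describe Avaamo's dictionary format.

```python
import re
from typing import Dict, List, Tuple


def find_domain_terms(text: str, glossary: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (phrase as written, expert-approved meaning) for each glossary hit.

    Longer phrases are tried first, so a multi-word domain term wins over a
    shorter entry it contains. Matching is case-insensitive and whole-word.
    """
    if not glossary:
        return []
    phrases = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(
        r"(?<!\w)(" + "|".join(re.escape(p) for p in phrases) + r")(?!\w)",
        re.IGNORECASE,
    )
    lookup = {phrase.lower(): meaning for phrase, meaning in glossary.items()}
    return [(m.group(0), lookup[m.group(0).lower()]) for m in pattern.finditer(text)]


# Illustrative entries a Spanish-speaking clinical expert might curate.
glossary = {
    "alta médica": "medical discharge",
    "baja médica": "sick leave",
    "alta": "discharge",
}

hits = find_domain_terms("Necesito el Alta Médica hoy", glossary)
```

The point of the design is that the domain meaning is resolved from the curated dictionary first; a word-for-word translation of "alta" would give "high", which is not what a patient asking about a discharge means.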

“With this other vendor I had to rebuild the virtual assistant in every new language” – Project Lead, Virtual Assistant COE, IT

The learning and training part of the virtual agent is critical. We had to ensure there was “transfer learning” across languages. For a truly multi-lingual experience, we also ensure that what is learned from user interactions in one language is passed on to the training models for other languages.

For an Asian insurance organization that provides policy-related services in 30+ languages, keeping the training data and utterances updated across all languages is an impossible task. Our unique patented “language modeling” technique uses transfer learning to carry the training across languages. This means streamlined management, since you only need to update the training utterances in one language, and a reduced cost of ownership.

“Do I have to start all over again for Voice and IVR as I expand beyond digital channels?” – Product Manager, US banking industry

No, not really. One of the key benefits of the Avaamo platform is true omni-channel support: the intent training and learning phrases become part of the newly created voice models for IVR or voice-based assistants.

Also, our unique, domain-specific entity templates include pre-trained intents for both voice and digital, which significantly reduce the time and effort it takes to develop the voice channel for the virtual agent.

In summary

When people talk rather than type, multilingual support goes well beyond rudimentary support for double-byte characters, which has been the software standard for typed input for 30+ years. Conversational AI platforms need to go much further and recognize tonality, cadence, word pairings and intonations, which change by language, culture and regional dialect. Domain-based phrases, abbreviations and idiomatic variation further complicate conversational AI comprehension.

With the enhancements announced today, we believe we have raised the bar for the Conversational AI industry: to listen, understand, comprehend and respond intelligently to human conversations in 114 languages and dialects. Many thanks to the Avaamo product teams, who spent years listening to our customers and designing the enhancements to solve the complexity of real-world variations while simultaneously bringing down the cost of ownership with thoughtful tooling and transfer learning capabilities.